Should I really target Kopf-Lischinski?

Before you vote for any option, know that this ain’t a democracy and I don’t promise to follow the winner, but I’m interested in the most popular option because I care. Also, before voting, read the rest of this post to understand the consequences of each choice.

What does the output of the full Kopf-Lischinski algorithm look like? Just like the image below:

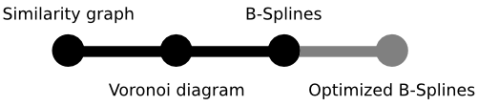

Like it? Me too. How close is libdepixelize to getting this result? That depends on what libdepixelize will aim for, but let’s leave that discussion for later. First, the image below gives an ultra-summarized answer to this question:

Like it? Now look at some images generated by libdepixelize from the same input used to generate the image above:

The output on the left is buggy by both Kopf-Lischinski’s standard and my own. For this result to be correct, these two gray blocks would have to be grouped into a single block, and I’d need to write a bit more code to make that happen.

The output on the right is “innovative”: I’ve been coding since the beginning to preserve as much color information as possible, so there’s nothing to fix, but I’d need to extend the Kopf-Lischinski algorithm to handle the “unfilled” space between two (non-)grouped blocks. The result wouldn’t be Kopf-Lischinski, but it would preserve more color information, and if the Kopf-Lischinski algorithm is ever patented, we’d have a different (partially patent-free) algorithm.

Now let me explain the technical details in simple, summarized language. The Kopf-Lischinski algorithm uses a similarity graph to group blocks of similar color, aiming at two results:

- Define a new shape for the image (and I very much like the result)

- Remove colors (and I really dislike this choice)

The algorithm removes color information to simplify the vectorization process, but I’ve come all this way without being held back by the “not that much increased” complexity of keeping it, and I think I can extend the algorithm to describe how to handle more color information in these final steps.
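
A minimal sketch of the first step, building the similarity graph, may help make “group blocks of similar color” concrete. It assumes the image is a list of rows of (r, g, b) tuples and uses the YUV thresholds (48, 7 and 6 on a 0 to 255 scale) that the paper borrows from hqx; treat those numbers, the BT.601-style conversion and every function name here as assumptions for illustration, not libdepixelize code. It stops at the graph itself: the paper’s later steps (resolving crossing diagonals, reshaping cells, fitting splines) are not shown.

```python
from itertools import product

# Hypothetical helpers for illustration; not libdepixelize's API.

# (dx, dy) offsets covering the 8-connected neighbourhood so that each
# undirected edge is visited once: right, down-left, down, down-right.
NEIGHBOUR_OFFSETS = [(1, 0), (-1, 1), (0, 1), (1, 1)]


def rgb_to_yuv(rgb):
    """Convert an (r, g, b) tuple in 0..255 to BT.601-style (y, u, v)."""
    r, g, b = rgb
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.169 * r - 0.331 * g + 0.5 * b
    v = 0.5 * r - 0.419 * g - 0.081 * b
    return y, u, v


def similar(a, b, thresholds=(48.0, 7.0, 6.0)):
    """Colours are similar unless some YUV channel differs by more than its threshold."""
    ya, ua, va = rgb_to_yuv(a)
    yb, ub, vb = rgb_to_yuv(b)
    ty, tu, tv = thresholds
    return abs(ya - yb) <= ty and abs(ua - ub) <= tu and abs(va - vb) <= tv


def similarity_graph(image):
    """Return the set of edges ((x0, y0), (x1, y1)) joining similar 8-neighbours."""
    height, width = len(image), len(image[0])
    edges = set()
    for y, x in product(range(height), range(width)):
        for dx, dy in NEIGHBOUR_OFFSETS:
            nx, ny = x + dx, y + dy
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and similar(image[y][x], image[ny][nx]):
                edges.add(((x, y), (nx, ny)))
    return edges


if __name__ == "__main__":
    # One white pixel (bottom-left) among three black ones.
    image = [[(0, 0, 0), (0, 0, 0)],
             [(255, 255, 255), (0, 0, 0)]]
    print(sorted(similarity_graph(image)))
```

For that 2×2 example the graph has three edges, all linking the black pixels to each other; no edge touches the white pixel, so it ends up in a block of its own.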

If you think the “non-enhanced” version would be easy to implement, it’s because you haven’t thought about an image containing a gradient. Neighboring blocks would have similar colors, but distant blocks would still have different colors, so there is no easy way to choose which blocks should be grouped. Most of the ideas I’ve had (since the beginning of the project) would produce different results depending on the “iteration order”, so I consider them too “fragile”/unstable. The only “correct” solutions I can think of at the moment are too expensive, and judging by the benchmark in the paper, I doubt they were the ones chosen.
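
The order dependence is easy to reproduce on a one-dimensional gradient. The sketch below is hypothetical: `greedy_groups`, `connected_groups`, the grey values and the threshold are made up for illustration and are not taken from libdepixelize or the paper. A greedy pass that adds each pixel to the first group whose every member is similar to it gives different groups when the visiting order changes, while the order-independent alternative of taking connected components chains the whole gradient into a single group.

```python
# Hypothetical illustration; not libdepixelize code.


def greedy_groups(values, order, threshold):
    """Visit pixels in `order`; each joins the first group whose every member
    is within `threshold` of it, otherwise it starts a new group.
    Returns a set of frozensets of pixel indices so results compare by content."""
    groups = []
    for i in order:
        for group in groups:
            if all(abs(values[i] - values[j]) <= threshold for j in group):
                group.append(i)
                break
        else:
            groups.append([i])
    return {frozenset(g) for g in groups}


def connected_groups(values, threshold):
    """Order-independent alternative for a 1-D row: connected components,
    where adjacent pixels are linked if they are within `threshold`."""
    groups, current = [], [0]
    for i in range(1, len(values)):
        if abs(values[i] - values[i - 1]) <= threshold:
            current.append(i)
        else:
            groups.append(frozenset(current))
            current = [i]
    groups.append(frozenset(current))
    return set(groups)


gradient = [0, 30, 60, 90]  # a 1-D gradient of grey levels
THRESHOLD = 40

forward = greedy_groups(gradient, [0, 1, 2, 3], THRESHOLD)
shuffled = greedy_groups(gradient, [1, 2, 0, 3], THRESHOLD)
chained = connected_groups(gradient, THRESHOLD)
print(forward, shuffled, chained)
```

Visiting the pixels left to right yields the groups {0, 1} and {2, 3}; starting from pixel 1 yields {1, 2}, {0} and {3}. Connected components avoid the order problem but merge all four pixels, even though the two ends of the gradient differ by 90 grey levels.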

Keep in mind that whichever path I choose, I’ll have more code to write.
